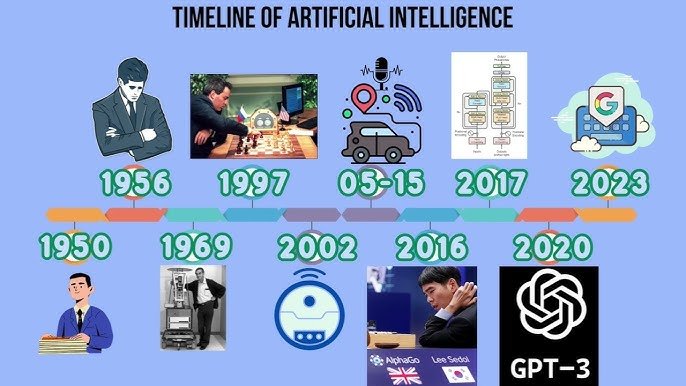

An Overview of the History of Artificial Intelligence Development

1940s-1950s: Theoretical Foundations and Early Exploration of Artificial Intelligence

- 1942: American science fiction writer Isaac Asimov proposed the “Three Laws of Robotics” in his short story Runaround, setting out the first ethical guidelines for robot behavior. This concept laid the foundation for future discussions on AI ethics.

- 1943: Neuroscientist Warren McCulloch and mathematician Walter Pitts introduced the “formal neuron” model (the M-P model), paving the way for the development of neural network theory.

- 1949: Donald Hebb introduced the concept of “synaptic plasticity,” offering new perspectives for neurophysiological research on learning and memory.

- 1950: Alan Turing published his seminal paper Computing Machinery and Intelligence in the journal Mind, proposing what is now known as the Turing Test and establishing a foundation for the field of artificial intelligence.

- 1956: The Dartmouth Conference was held, officially marking the birth of the AI discipline. John McCarthy and others coined the term “Artificial Intelligence.”

1960s-1970s: Diversification of Early AI Research

- 1966: Joseph Weizenbaum developed the world’s first chatbot, ELIZA, which laid the groundwork for natural language processing and human-computer interaction.

- 1969: Marvin Minsky and Seymour Papert published Perceptrons: An Introduction to Computational Geometry, which questioned the effectiveness of the perceptron model, leading to a temporary halt in neural network research.

- 1973: The publication of the Lighthill Report marked the beginning of the “AI Winter,” as many overly optimistic expectations for AI failed to materialize, leading to a sharp decline in funding and support for AI research.

- 1977: The “Wu Method,” introduced by Chinese academician Wu Wenjun, created a sensation in the international mathematics community, enabling the mechanical proof of geometric theorems.

1980s-1990s: Expert Systems and the Revival of Neural Networks

- 1980: Carnegie Mellon University hosted the first machine learning workshop, the forerunner of the International Conference on Machine Learning, signaling the rise of machine learning as a field.

- 1986: David Rumelhart, Geoffrey Hinton, and Ronald Williams popularized the backpropagation algorithm for training multi-layer networks, laying the groundwork for the development of deep neural networks.

- 1987: The second “AI Winter” arrived as the LISP machine market collapsed, severely hindering AI commercialization and research progress.

- 1990: Rodney Brooks proposed a “bottom-up” approach to AI research, in which intelligent behavior is built up from simple behaviors that interact directly with the environment rather than from symbolic reasoning.

2000s-Early 2010s: The Rise of Deep Learning and Data-Driven Transformation

- 2006: Geoffrey Hinton and colleagues introduced Deep Belief Networks (DBNs) and published related work on deep autoencoders in Science, helping to spark the deep learning revolution.

- 2008: Google’s launch of speech recognition technology marked the early application of intelligent assistants.

- 2011: Apple released Siri, marking the practical application of voice-activated intelligent assistants.

- 2012: Geoffrey Hinton and his students designed AlexNet, which won the ImageNet Large Scale Visual Recognition Challenge, leading to the rise of deep neural networks.

2010s: Breakthroughs in Large Models and Natural Language Processing

- 2015: OpenAI was founded, promoting open-source AI research and collaborative development.

- 2016: DeepMind’s AlphaGo defeated the world champion Go player Lee Sedol, marking a significant milestone in AI history.

- 2017: Google introduced the Transformer model, which revolutionized the field of natural language processing, becoming a key milestone in deep learning research.

- 2019: OpenAI released GPT-2, a large pre-trained language model that demonstrated remarkable capabilities in natural language generation, significantly advancing the adoption of AI in various applications.

2020s: Maturity of AI and Widespread Application

- 2020: OpenAI released GPT-3, a language model that showed strong few-shot performance across a wide range of tasks, establishing the foundation for generative AI (AIGC).

- 2020: Diffusion models rose to prominence and began to displace GANs (Generative Adversarial Networks) as the leading technique for AI-generated art and image synthesis.

- 2022: OpenAI released GPT-3.5, further enhancing the capabilities of large language models, which excelled in tasks like conversation and creative writing.

- 2023: With the development of multimodal AI technologies, AI systems began to handle not only text but also images, sound, and video, ushering in a new era of intelligent systems.

- 2024: On February 15, 2024, OpenAI announced the AI video generation model Sora.

- 2024: On July 25, 2024, OpenAI introduced SearchGPT, an AI-powered search engine that is currently in the testing phase.

(as of July 25, 2024)